by George Crump, Storage Switzerland

In terms of storage performance, the drive itself is no longer the bottleneck. Thanks to flash storage, attention has turned to the hardware and software that surround the media, especially the capabilities of the CPU that drives the storage software. The importance of CPU power is evidenced by the increase in overall storage system performance when an all-flash array vendor releases a new storage system: the flash media in that system doesn’t change, but overall performance increases. That increase, however, falls short of what it should be, because storage software does not take advantage of the parallel nature of the modern CPU.

Moore’s Law Becomes Moore’s Suggestion

Moore’s Law is an observation by Intel co-founder Gordon Moore. The simplified version of this law states that the number of transistors on a chip will double roughly every two years. IT professionals assumed this meant that the CPUs they buy would get significantly faster every two years or so. Traditionally, this meant that the clock speed of the processor would increase, but recently Intel has hit a wall because increasing clock speeds also led to increased power consumption and heat problems. Instead of increasing clock speed, Intel has focused on adding more cores per processor. The modern data center server has essentially become a parallel computer.

Multiple cores per processor are certainly an acceptable method of increasing performance and continuing to advance Moore’s Law. Software, however, needs to be rewritten to take advantage of this new parallel computing environment. Operating systems, application software and, of course, storage software all need to be parallelized. Recoding software to make it parallel is challenging. The key is to manage I/O timing and locking, which makes multi-threading a storage application more difficult than, for example, multi-threading a video rendering project. As a result, it has taken time to get to the point where the majority of operating systems and application software have some flavor of parallelism.

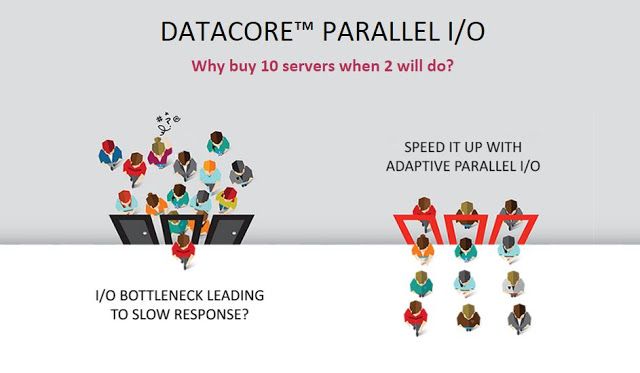

Lagging far behind in the effort to take full advantage of the modern processor is storage software. Most storage software, whether built into the array or part of the new crop of software-defined storage (SDS) solutions, is unable to exploit the wide availability of processing cores. It is primarily single-core. At worst, it uses only one core per processor; at best, it uses one core per function. If cores are thought of as workers, it is best to have all the workers available to all the tasks, rather than each worker focused on a single task.

Why Cores Matter

Using cores efficiently has only recently become important. Most legacy storage systems were hard drive based, lacking advanced caching or flash media to drive performance. As a result, the need to support the multi-core environment efficiently was not as obvious as it is now that systems have a higher percentage of flash storage. The lack of multi-core performance was overshadowed by the latency of the hard disk drive. Flash and storage response time is just one side of the I/O equation. On the other side, the data center is now populated with highly dense virtual environments or, even more contentious, hyperconverged infrastructure. Both of these environments generate a massive amount of random I/O that, thanks to flash, the storage system should be able to handle very quickly. The storage software is the interconnect between the I/O requester and the I/O deliverer, and if it can’t efficiently support all the cores at its disposal, it becomes the bottleneck.

All storage systems that leverage Intel CPUs face the same challenge: how to leverage CPUs that are increasing in cores, but not in raw speed. In other words, they don’t perform a single process faster, but they do perform multiple processes at the same speed simultaneously, netting a faster overall completion time if the cores are used efficiently. Storage software needs to adapt and become multi-threaded so it can distribute I/O processing across the available cores and take full advantage of them.

For most vendors this may mean a complete rewrite of their software, which will take time and effort and risks incompatibility with their legacy storage systems.

How Vendors Fake Parallel I/O

Vendors have tried several techniques to exploit multiple cores without actually “parallelizing” their code. Some storage system vendors tie specific cores to specific storage processing tasks. For example, one core may handle raw inbound I/O while another handles RAID calculations. Other vendors distribute storage processing tasks in a round-robin fashion. If cores are thought of as workers, this technique treats cores as individuals instead of a team. As each task comes in, it is assigned to a core, and only that core can work on it. If it is a big task, it can’t get help from the other cores. While this technique does distribute the load, it doesn’t allow multiple workers to work on the same task at the same time. Each core has to do its own heavy lifting.
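The gap between the round-robin approach and a team approach can be sketched with a toy model. The Python below is an illustrative assumption, not any vendor's scheduler: task costs are abstract work units, `round_robin_makespan` pins each whole task to one core, and `shared_pool_makespan` idealizes a design in which every task can be split evenly across all cores with no locking or coordination overhead.

```python
def round_robin_makespan(task_costs, cores):
    """Time to finish when each task is pinned, whole, to one core in turn."""
    loads = [0] * cores
    for i, cost in enumerate(task_costs):
        loads[i % cores] += cost  # only this core may work on the task
    return max(loads)


def shared_pool_makespan(task_costs, cores):
    """Idealized time when all cores can share every task (no overhead)."""
    return sum(task_costs) / cores


# Hypothetical mix: one big task and four small ones, on four cores.
tasks = [80, 5, 5, 5, 5]
print(round_robin_makespan(tasks, 4))  # 85
print(shared_pool_makespan(tasks, 4))  # 25.0
```

In this model the big task dominates the round-robin result because no other core can help with it. A real system would land somewhere between the two figures once synchronization costs are counted.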

Scale-out storage systems are similar in that they leverage multiple processors within each node of the storage cluster, but they are not granular enough to assign multiple cores to the same task. Like the systems described above, they typically have a primary node that acts as a task delegator and assigns the I/O to a specific node, and that node handles storing the data and managing data protection.

These designs count on the I/O coming from multiple sources so that each discrete I/O stream can be processed by one of the available cores. These systems will claim very high IOPS numbers, but require multiple applications to get there. They work best in an environment that requires a million IOPS because it has ten workloads each generating 100,000 IOPS, rather than an environment that has one workload generating 1 million IOPS and no other workloads over 5,000. To some extent, vendors also “game” the benchmark by varying I/O size and patterns (random vs. sequential) to achieve a desired result. The problem is that this I/O is not the same as what customers will see in their data centers.
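The ten-workload versus one-workload comparison can be worked through with simple arithmetic. The sketch below assumes a hypothetical system with 10 cores, each able to process at most 100,000 IOPS; both figures are chosen only to give round numbers and do not describe any real product. `per_stream_iops` binds each I/O stream to one core, while `pooled_iops` idealizes a design where any core can serve any stream.

```python
def per_stream_iops(demands, cores, per_core_limit):
    """Delivered IOPS when each I/O stream is bound to a single core."""
    loads = [0] * cores
    for i, demand in enumerate(demands):
        loads[i % cores] += demand
    # A core cannot deliver more than its own limit, however large the demand.
    return sum(min(load, per_core_limit) for load in loads)


def pooled_iops(demands, cores, per_core_limit):
    """Delivered IOPS when any core can serve any stream (idealized)."""
    return min(sum(demands), cores * per_core_limit)


CORES, LIMIT = 10, 100_000  # assumed figures, chosen for round numbers

ten_even = [100_000] * 10           # ten workloads at 100,000 IOPS each
one_hot = [1_000_000, 5_000, 5_000]  # one large workload, two small ones

print(per_stream_iops(ten_even, CORES, LIMIT))  # 1000000
print(per_stream_iops(one_hot, CORES, LIMIT))   # 110000
print(pooled_iops(one_hot, CORES, LIMIT))       # 1000000
```

Under these assumptions the same hardware delivers its headline number only when the demand happens to arrive as many evenly sized streams.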

The Impact of True Parallel I/O

True parallel I/O utilizes all the available cores across all the available processors. Instead of siloing a task to a specific core, it assigns all the available cores to all the tasks. In other words, it treats the cores as members of a team. Parallel I/O storage software works well in either type of workload environment: ten workloads each generating 100,000 IOPS or one workload generating 1 million IOPS.
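As a rough illustration of letting several workers share one large task, the sketch below splits a single buffer into chunks and checksums them concurrently. It is a generic Python example, not the design of any storage product: the chunk size, worker count and function names are all assumptions, and threads are used because CPython's `hashlib` releases the global interpreter lock while hashing large buffers.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 1 << 20  # 1 MiB per chunk; an arbitrary illustrative size


def split(buffer, size=CHUNK_SIZE):
    """Cut one large buffer into fixed-size chunks (the last may be shorter)."""
    return [buffer[i:i + size] for i in range(0, len(buffer), size)]


def digest(chunk):
    """SHA-256 hex digest of a single chunk."""
    return hashlib.sha256(chunk).hexdigest()


def checksum_serial(buffer):
    """One worker processes every chunk in order."""
    return [digest(chunk) for chunk in split(buffer)]


def checksum_parallel(buffer, workers=4):
    """All workers pull chunks of the same task; results keep chunk order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(digest, split(buffer)))


data = bytes(4 * CHUNK_SIZE)  # a single 4 MiB "I/O" made of zero bytes
print(checksum_parallel(data) == checksum_serial(data))  # True
```

The parallel version produces the same per-chunk results as the serial one; what changes is that a single large request no longer has to wait on one worker.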

Parallel I/O is a key element in powering the next generation data center because the storage processing footprint can be dramatically reduced and can match the reduced footprint of solid-state storage and server virtualization. Parallel I/O provides many benefits to the data center:

- Full Flash Performance

As stated earlier, most flash systems show improved performance when more processing power is applied to the system. Correctly leveraging cores with multi-threading delivers the same benefit without having to upgrade processing power. If the storage software is truly parallel, it can deliver better performance with less processing power, which drives costs down while increasing scalability.

- Predictable Hyperconverged Architectures

Hyperconverged architectures are increasing in popularity thanks to available processing power at the compute tier. Hypervisors do a good job of utilizing multi-core processors. The problem is that a single-threaded storage software component becomes the bottleneck. Often storage software, the key element of hyperconvergence, is isolated to one core per hyperconverged node. These cores can be overwhelmed during a performance spike, leading to inconsistent performance that could impact the user experience. Also, to service many VMs and critical business applications, organizations typically need to throw more and more nodes at the problem, eroding the productivity and cost-saving benefits of consolidating more workloads on fewer servers. A storage software solution that is parallel can share multiple cores in each node. The result is more virtual machines per host, fewer nodes to manage and more consistent storage I/O performance, even under load.

- Scale Up Databases

While they don’t get the hype of modern NoSQL databases, traditional scale-up databases (e.g., Oracle, Microsoft SQL Server) are still at the heart of most organizations. Because the I/O stream comes from a single application, they don’t generate enough independent parallel I/O to be distributed across specific cores. The parallel I/O software’s ability to make multiple cores act as one is critical for this type of environment. It allows scale-up environments to scale further than ever.

Conclusion

The data center is becoming increasingly dense: more virtual machines are stacked on virtual hosts, legacy applications are expected to support more users per server, and more IOPS are expected from the storage infrastructure. While the storage infrastructure now has the right storage media (flash) in place to support the consolidation of the data center, the storage software needs to support the available compute power. The problem is that compute power is now delivered via multiple cores per processor rather than through a faster single core. Storage software that has parallel I/O will be able to take full advantage of this processor reality and support these dense architectures with a storage infrastructure that is equally dense.

Read the full article on Storage Swiss: Software-Defined Storage meets Parallel I/O