As companies transform themselves with a mix of digital technologies, they face growing volumes of data. Whether they are adding new data sources (such as social media for a consumer-oriented company or sensor data for a manufacturing company) or drawing on more historical data (for example, to determine seasonal pricing trends), companies are using more data in their analytics.

But this growth comes at a cost: the extra data is slowing down analysis, and applications are blowing past the windows in which their results are needed. This has a direct impact on the business. For example, a company that evaluates its inventory to set the day’s pricing specials may not have its deals determined before its stores open.

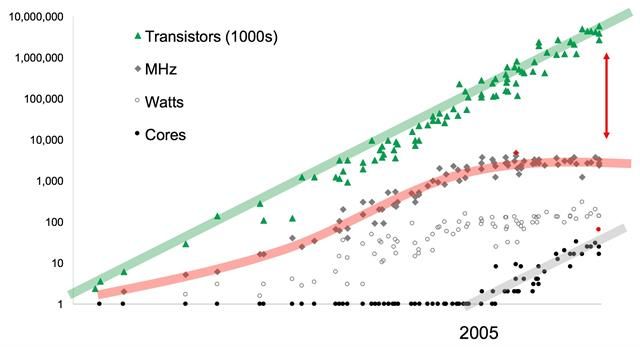

Where’s the bottleneck for these slow applications? The first place IT looks is the host. If the server is more than a few years old, companies will often upgrade it to a modern multi-processor, multi-core system with lots of RAM. Today’s servers offer plenty of compute power: the growth in transistor counts predicted by Moore’s Law has produced CPUs with more and more cores.

But the increase in cores has come at the expense of clock speed. In fact, clock speed has barely budged even as transistor counts have climbed. This has led to a second problem: the I/O gap. Most I/O is processed serially, and with clock speeds not increasing, there is now a big difference between the work potential of the compute and storage tiers.
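
To make the gap concrete, here is a rough back-of-the-envelope sketch in Python. It assumes a job whose compute work divides evenly across cores while its I/O stays serial. The function names (`run_time`, `io_share`) and the 80-second/20-second split are made up for illustration, not measurements from any real system.

```python
def run_time(compute_seconds: float, io_seconds: float, cores: int) -> float:
    """Wall-clock time if compute splits evenly across cores and I/O stays serial.

    This is a simplifying assumption for illustration, not a model of any
    particular server or storage stack.
    """
    return compute_seconds / cores + io_seconds


def io_share(compute_seconds: float, io_seconds: float, cores: int) -> float:
    """Fraction of the total run time spent on serial I/O."""
    return io_seconds / run_time(compute_seconds, io_seconds, cores)


# Hypothetical job: 80 s of compute and 20 s of I/O on a single core.
for cores in (1, 4, 16, 64):
    total = run_time(80.0, 20.0, cores)
    share = io_share(80.0, 20.0, cores)
    print(f"{cores:>2} cores: {total:6.2f} s total, {share:.0%} spent on I/O")
```

In this toy model, adding cores shrinks the compute portion but leaves the I/O portion untouched, so I/O accounts for a growing share of the run time as the core count goes up.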

In a follow-up blog post, we’ll discuss the I/O gap and how companies are attempting to address this issue.

To improve performance, encourage your IT team to download a trial of MaxParallel. There’s no risk, and it’s likely that your entire organization, including IT, will benefit from it. They can test it in their own environment today at no cost with a 30-day trial!